In this blog, we will observe how to do web scraping of a website from the scratch. A website, which we will extract is a computer online shop. Let’s get started.

We have used a Windows machine, therefore, some steps might differ on the other machines.

Making a Real Environment

Initially, make a newer directory named scrapy_tutorial, so move within directory:

mkdir scrapy_tutorial cd scrapy_tutorial

After that, run that command to make a real environment within venv directory:

virtualenv venv

Activate the real environment and return back to main directory:

cd venv/Scripts activate cd ../..

Installation Dependencies

Besides Scrapy, we will utilize a library named scrapy-user-agents. This is a library, which provides user-agent for the requests as well as handles the rotations.

It’s time to install the Scrapy first:

pip install Scrapy

After that, install the scrapy-user-agents:

pip install scrapy-user-agents

Let’s start the project:

scrapy startproject elextra

Open elextra/settings.py as well as insert the following:

DOWNLOADER_MIDDLEWARES = {

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,

'scrapy_user_agents.middlewares.RandomUserAgentMiddleware': 400,

}

It is the configuration that you need to add while utilizing scrapy-user-agents.

After that, make a spider:

scrapy genspider elextraonline elextraonline.com

Then, open elextra/items.py as well as fill that using this code:

What we do here is that we define the containers of the data we scrape.

Open elextra/spiders/elextraonline.py and delete allowed_domain because we don’t require that for now. Just replace this item start_urls with:

start_urls = ['https://elextraonline.com/kategori/logitech-products/']

Here, we will extract all the Logitech products on the website.

Whenever you open a website, you could see this is paginated. We require to handle it. Just replace the parse function using this:

def parse(self, response, **kwargs):

# Handling pagination

next_page: str = response.css("a.next.page-number::attr('href')").get()

if next_page:

print(next_page)

yield response.follow(next_page, callback=self.parse)

You might get confused using this code:

response.css("a.next.page-number::attr('href')")

We utilize that code to get elements from a website with CSS selectors. We get any anchor elements using class next as well as page-number, and then scrape the href. You could get class of an element using an inspector tool within your browser.

We will go to next page till there are no next pages button anymore till the previous page.

After that, we will extract data from each product. We need to open each link of all products to have more data about the products. Let’s make a function to deal with it first:

def parse_product(self, response):

item: ElextraItem = ElextraItem()

item['name'] = response.css("h1.product-title.entry-title::text").get().strip()

item['price'] = response.css("span.woocommerce-Price-amount.amount::text").getall()[-1].strip()

item['image_link'] = response.css(

"img.attachment-shop_single.size-shop_single.wp-post-image::attr('src')").get().strip()

desc_selector = Selector(text=response.css("div#tab-description").get())

desc_text_list = desc_selector.xpath('//div//text()').getall()

desc = ''

for desc_text in desc_text_list:

desc += desc_text

desc = desc.replace('DESKRIPSI', '').strip()

description_result = response.css("div#tab-description > p::text").extract()

for res in description_result:

desc += res

item['description'] = desc

return item

The logic used here for using CSS selector is similar like before. We call a strip function given on strings for removing irregular white space to make our data cleaner.

The code for getting a product description is a bit complex. The problem is that there are no persistent elements, which has the description body. At times, they utilize p as well as they utilize ul. Therefore, what we perform here is, we get a general parent and then extract all the texts within whereas removing the needless ones like text DESKRIPSI.

Coming back to parse function, just add that above this pagination section:

product_links: list = response.css("p.name.product-title > a::attr(href)")

for product in product_links:

href = product.extract()

yield response.follow(href, callback=self.parse_product)

Therefore, we open each page of product as well as parse that.

Your elextra/spiders/elextraonline.py needs to look like that now:

import scrapy

from scrapy import Selector

from ..items import ElextraItem

class ElextraonlineSpider(scrapy.Spider):

name = 'elextraonline'

start_urls = ['https://elextraonline.com/kategori/logitech-products/']

def parse(self, response, **kwargs):

product_links: list = response.css("p.name.product-title > a::attr(href)")

for product in product_links:

href = product.extract()

yield response.follow(href, callback=self.parse_product)

# Handling pagination

next_page: str = response.css("a.next.page-number::attr('href')").get()

if next_page:

print(next_page)

yield response.follow(next_page, callback=self.parse)

def parse_product(self, response):

item: ElextraItem = ElextraItem()

item['name'] = response.css("h1.product-title.entry-title::text").get().strip()

item['price'] = response.css("span.woocommerce-Price-amount.amount::text").getall()[-1].strip()

item['image_link'] = response.css(

"img.attachment-shop_single.size-shop_single.wp-post-image::attr('src')").get().strip()

desc_selector = Selector(text=response.css("div#tab-description").get())

desc_text_list = desc_selector.xpath('//div//text()').getall()

desc = ''

for desc_text in desc_text_list:

desc += desc_text

desc = desc.replace('DESKRIPSI', '').strip()

description_result = response.css("div#tab-description > p::text").extract()

for res in description_result:

desc += res

item['description'] = desc

return item

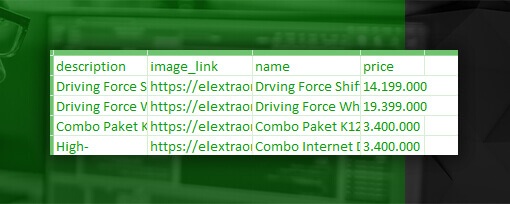

Export Results

Run the given command to get the scraping results to the CSV file:

scrapy crawl elextraonline -o result.csv -t csv

The result.csv will look like:

It’s done! You don’t require to follow any exact website like this. Treat that as a fundamental building block for own project. We hope you will find that useful.

For more information, contact Retailgators now or ask for a free quote!

Leave a Reply

Your email address will not be published. Required fields are marked